When was the last time you sat down to review a customer conversation?

I know, it’s tough. How do you measure something like sounding helpful or making a customer feel heard? Without a clear structure, it’s easy to rely on gut instinct. But that leads to inconsistent feedback and missed chances to coach your team.

That’s where a quality assurance scorecard comes in.

A good scorecard gives you a clear, repeatable way to evaluate support interactions. It helps you see what’s working, what needs fixing, and how to improve, without the guesswork.

In this guide, we’ll show you how to build a QA scorecard from scratch, with simple steps you can start using right away.

Table of Contents

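- What is a QA Scorecard?

- What Are the Must-Have Components in a QA Scorecard?

- Why Do You Need a QA Scorecard?

- How to Build a QA Scorecard?

- 1. Start with clear goals

- 2. Involve your key stakeholders

- 3. Identify some key questions to answer

- 4. Establish clear evaluation criteria

- 5. Assign weightage to each metric category

- 6. Set up a simple rating system

- 7. Test the scorecard before rolling it out

- 8. Train everyone who’ll use the scorecard

- 9. Run regular QA review sessions

- 10. Act on what you learn

- Types of QA Scorecards + Examples & Templates

- How to Choose the Right QA Scorecard?

- Common QA Scorecard Mistakes (and How to Avoid Them)

- Key Best Practices for QA Scorecard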

What is a QA Scorecard?

A Quality Assurance (QA) scorecard is a tool that helps you evaluate how well your team handles customer interactions. It makes sure everyone is following the same standards and gives you a fair way to coach and improve.

Support managers and QA teams use it to rate things like:

- Did the agent greet the customer properly?

- Did they explain the solution clearly?

- Did they follow the right steps?

- Did they solve the problem?

The idea is to take something that usually feels vague—like “good support”—and break it down into clear, simple points.

With a scorecard, feedback becomes easier to give, and your team knows exactly what to work on. Without one, you’re guessing. And that usually leads to uneven service and frustrated customers.

Next, let’s look at what a scorecard that works needs to include.

What Are the Must-Have Components in a QA Scorecard?

While every scorecard will differ based on your team’s specific needs, most of them include a few essentials: evaluation criteria, a scoring system, a weighting system, a feedback mechanism, and an action plan.

If you’re building or refining your scorecard, these are the core components to focus on. Let’s walk through them together:

- Define clear evaluation criteria: Identify the key behaviors and skills you want to assess, such as communication, product knowledge, and empathy. Use real call examples to clarify what “good” looks like for each area.

- Choose a consistent scoring system: Pick a rating scale that’s simple to use and easy to interpret, whether it’s 1–5, 1–10, yes/no, or percentages. Make sure every evaluator understands how to apply the scale the same way.

- Assign weights to what matters most: Decide which criteria impact your business outcomes the most. For example, give compliance or resolution a higher weight than greeting or tone if those are more critical in your industry.

- Add a feedback section with examples: Allow reviewers to leave specific, constructive comments. Encourage them to highlight what worked well and what could be improved. This should be backed up with actual snippets from the call or chat.

- Include an action plan prompt: Don’t just stop at scoring; add a section for next steps. Use it to set coaching goals, recommend training, or flag recurring issues that need to be addressed.

A good QA scorecard helps your team stay on track: fixing issues the right way, talking to customers like humans, and improving the overall experience. But it’s not a one-and-done thing.

Check in on it often and update it when goals change or if your team gives you feedback that something’s off.

Why Do You Need a QA Scorecard?

I used to rely on gut feel to evaluate performance. If a call sounded off, I flagged it. If it felt fine, I moved on.

But over time, I realized something: my instincts were missing patterns. Feedback became inconsistent. Coaching felt reactive. And team performance started to slide.

So I built a QA scorecard and everything changed.

A well-designed QA scorecard helps you do eight important things: deliver consistent feedback, identify performance gaps, drive quality, surface trends, optimize training, track KPIs, empower agents, and improve customer satisfaction.

Here’s how each one works:

- Makes feedback fair and consistent. With a scorecard, everyone’s held to the same standard, no matter who’s reviewing. Agents know what success looks like, and reviewers don’t have to rely on instinct or interpretation.

- Identifies performance gaps. The scorecard breaks performance down into clear, observable behaviors. That helps pinpoint exactly where each agent, or your whole team, needs help.

- Drives consistent quality across the board. Scorecards give you a structured, shared definition of quality. This keeps every customer interaction aligned with your brand values and expectations.

- Surfaces trends you’d otherwise miss. With scorecard data, you’ll start to notice patterns. Maybe one team consistently nails empathy, or another struggles with policy adherence. That’s insight you can act on.

- Optimizes training and coaching efforts. Vague advice becomes specific guidance. Instead of saying, “be clearer,” you can say, “let’s work on simplifying your explanations and confirming understanding.”

- Tracks performance against key support KPIs. Connect your QA insights with key metrics like first call resolution, handle time, and CSAT to get a complete picture of team performance.

- Allows agents to self-correct and grow. When feedback is structured, specific, and fair, agents are more likely to take ownership and actively work on improving.

- Improves customer satisfaction and loyalty. When service quality becomes consistent, customer experience improves. That translates to happier customers, stronger loyalty, and better lifetime value.

This way, agents know what success looks like. Managers know how to coach. And the whole team moves in the same direction.

Without a scorecard, you’re guessing. With one, you’re coaching with confidence.

Now that we’ve covered the “why,” let’s walk through how to build a great QA scorecard.

How to Build a QA Scorecard?

A quality assurance scorecard doesn’t need to be complicated. But it does need to be clear, easy to use, and focused on what really matters to your customers.

When done right, it gives your team a practical way to coach, improve, and deliver more consistent service. Here’s how to build one that actually works.

1. Start with clear goals

Before you build your scorecard, get clear on what you want it to do.

Is it to improve customer satisfaction? Help agents grow? Make sure your team is following processes? Whatever it is, write it down, because your goals will guide everything else.

Be specific. Don’t just say “better service.” Say, “We want to improve first call resolution from 65% to 80% in the next three months,” or “We want to cut handle time by 10% this quarter.”
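A numeric goal like the FCR example above only works if the metric is calculated the same way every time. Here is a minimal Python sketch. It assumes FCR means the share of all tickets resolved within a single contact; some teams divide by resolved tickets instead. The `Ticket` record and its fields are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Ticket:
    contacts: int   # hypothetical field: number of customer contacts on this issue
    resolved: bool  # hypothetical field: whether the issue was eventually resolved

def first_contact_resolution(tickets: list[Ticket]) -> float:
    """Percentage of tickets resolved within a single contact (assumed definition)."""
    if not tickets:
        return 0.0
    fcr = sum(1 for t in tickets if t.resolved and t.contacts == 1)
    return 100 * fcr / len(tickets)

def goal_progress(baseline: float, current: float, target: float) -> float:
    """Fraction of the way from baseline to target (0.0 = no change, 1.0 = goal met)."""
    return (current - baseline) / (target - baseline)

tickets = [Ticket(1, True), Ticket(1, True), Ticket(2, True), Ticket(1, False)]
print(first_contact_resolution(tickets))  # 2 of 4 tickets resolved on first contact
print(goal_progress(baseline=65.0, current=72.5, target=80.0))  # halfway from 65% to 80%
```

With the 65% to 80% goal, an FCR of 72.5% means you are halfway there. That is easier to discuss in a monthly check-in than the raw number alone.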

To pin down these goals, ask yourself the following questions:

- Are we trying to improve customer satisfaction?

- Do we need to follow compliance rules more closely?

- Are we trying to make support more efficient?

- Do we want to coach agents more consistently?

Clear goals will help you choose metrics and evaluation criteria that matter. Take SeatGeek as an example: they used QA scorecard software and set clear goals and expectations, focusing on operational needs and customer satisfaction metrics.

💡Tip: Involve leadership in setting goals to ensure alignment with broader organizational priorities.

2. Involve your key stakeholders

You can’t build a useful scorecard by yourself. To make it work in the real world, bring in people who see different sides of customer service:

- Team leads know what good performance looks like on the ground.

- QA analysts know how to keep scoring fair and consistent.

- Customer-facing employees can tell you what really happens in conversations, not just what should happen.

💡Actionable steps you can take:

✅ Host a 60-minute workshop with stakeholders to align on what “quality” means.

✅ Ask each participant to list their top three factors for evaluating great service, and then compare and prioritize as a group.

Involving all of them helps you build something that is practical, realistic, and likely to be used.

3. Identify some key questions to answer

Now that you know your goals, the next step is to decide what exactly you’ll score.

Start by asking:

- What do we want to see more of in our customer interactions?

- What are the non-negotiables every agent should follow?

Don’t try to measure everything. Your scorecard should measure what matters most to your customer experience, not just what’s easy to track.

There are two practical ways to organize your scorecard: the 4C Framework and the Pillar Framework. Let’s break them down.

Option 1: The 4C Framework

Created by Jeremy Watkins at NumberBarn, this method focuses on four areas that cover the essentials of a good support interaction:

1. Communicate Clearly and Professionally

- Clarity: Ensures agents convey information clearly and concisely.

- Tone: Maintains a professional and friendly demeanor.

- Active Listening: Demonstrates attentiveness to customer needs.

2. Build Real Customer Connection

- Empathy: Agents understand and relate to customer feelings.

- Personalization: Tailors interactions based on customer history.

- Engagement: Actively involves customers in the conversation.

3. Follow Rules and Protect Customer Data

- Adherence to Scripts: Follows established guidelines during interactions.

- Data Protection: Safeguards customer information and complies with regulations.

- Risk Management: Identifies compliance risks during calls.

4. Solve Issues Correctly and Completely

- Accuracy of Information: Ensures details provided are correct.

- Resolution Quality: Assesses whether issues are resolved effectively in one interaction.

- Follow-Up Procedures: Implements necessary follow-up actions.

Use this if you want a simple, people-first structure to start measuring what really matters in conversations.

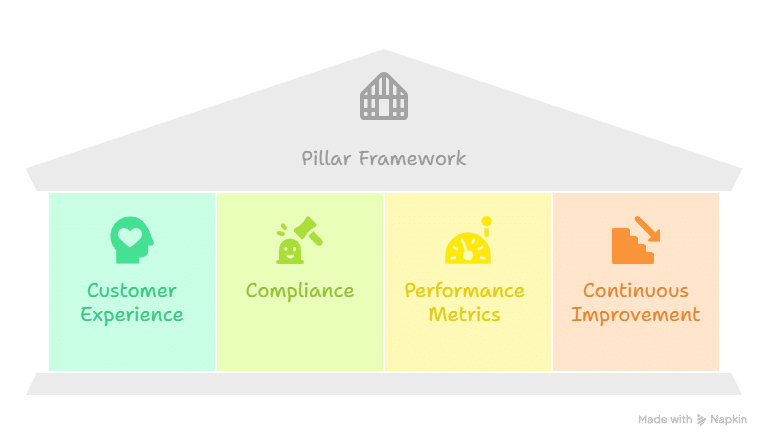

Option 2: The Pillar Framework

This model takes a more comprehensive approach to evaluating customer service quality. It organizes metrics into four broader themes:

- Customer Experience: Use metrics like CSAT and NPS to understand how customers feel after an interaction.

- Compliance: Make sure agents follow internal processes and industry regulations.

- Performance Metrics: Measure things like First Call Resolution (FCR) and Average Handle Time (AHT).

- Continuous Improvement: Use feedback from QA reviews, agents, and customers to guide coaching.

💡Tip: Collaborate with stakeholders to ensure selected metrics are relevant and measurable.

4. Establish clear evaluation criteria

If your metrics are fuzzy, your feedback will be too. And that’s a fast way to stall agent growth.

If you write “Good communication” on a scorecard, everyone will interpret it differently, which will kill consistency.

Break broad ideas into specific, observable behaviors. For example, instead of scoring “communication” as a whole, split it into things like:

✅ Clear Communication:

- Used simple, jargon-free language

- Confirmed customer understanding before closing the interaction

❌ Unclear Communication:

- Used technical terms without explanation

- Gave incomplete or confusing answers

- Failed to confirm resolution

Do this for every item on your scorecard. Make sure each one is easy to rate and easy to explain.

💡Tip: You can write a scoring guide with specific success indicators. Also, use real call/chat examples to illustrate “Exceeds Expectations,” “Meets Expectations,” and “Needs Improvement.” These specific evaluation criteria allow you to have consistent and fair scoring.


5. Assign weightage to each metric category

Not every behavior has the same impact. Some directly affect customer trust or business outcomes, while others are nice to have.

Weighting helps you focus attention where it counts. It ensures your scores reflect your biggest priorities, like resolving issues accurately or delivering a strong customer experience.

Here’s a simple example:

| Metric | Weight |
|---|---|
| Issue Resolution | 50% |
| Empathy & Tone | 30% |
| Script Adherence | 20% |

In this case:

- Issue resolution carries the most weight—it reduces repeat contacts and builds trust.

- Empathy helps build loyalty and improves customer satisfaction.

- Script adherence protects you from compliance risks.

Use weights to reflect your team’s priorities. And revisit them every 6 months, because what matters today might change tomorrow.
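Here is the arithmetic behind a weighted score, as a minimal Python sketch. It uses the example weights from the table above and assumes each category is scored on a 1–5 scale. The `weighted_score` helper is hypothetical.

```python
# Example weights from the table above; they must sum to 1.0.
WEIGHTS = {
    "Issue Resolution": 0.50,
    "Empathy & Tone": 0.30,
    "Script Adherence": 0.20,
}

def weighted_score(scores: dict[str, int], max_score: int = 5) -> float:
    """Combine per-category scores (1..max_score) into a 0-100 weighted score."""
    if abs(sum(WEIGHTS.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    if set(scores) != set(WEIGHTS):
        raise ValueError("every weighted category needs exactly one score")
    total = 0.0
    for category, weight in WEIGHTS.items():
        # Express each score as a share of the maximum, then apply its weight.
        total += weight * scores[category] / max_score
    return round(100 * total, 1)

# Strong resolution, middling tone, weak script adherence:
print(weighted_score({"Issue Resolution": 5, "Empathy & Tone": 3, "Script Adherence": 2}))
```

This prints 76.0, because the strong resolution score carries half the weight. Dividing by the maximum means a 1 still earns 20% of a category’s weight. If you want a 1 to count as zero, rescale with `(score - 1) / (max_score - 1)` instead.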

💡Tip: Ensure weight distribution aligns with organizational priorities and customer expectations.

6. Set up a simple rating system

Once you’ve decided what to measure, the next step is figuring out how to score it.

Your rating system should be easy to use and easy to explain. Reviewers need clarity to score fairly. Agents need transparency to know what’s expected of them.

You’ve got a few options:

- Numerical scale – like 1–5 or percentages. Good for tracking trends over time.

- Qualitative scale – like “Exceeds Expectations” or “Needs Improvement,” with space for comments.

- Hybrid approach – combine a number with a short description and optional comments. This gives the best of both worlds.

Whatever you choose, make sure each score has a clear meaning. Don’t leave room for guesswork.

Here’s an example of a simple three-level scale on a 1–5 range:

| Score | Description | Example |
|---|---|---|
| 5 | Exceeds Expectations | The agent resolved the issue and went above and beyond: offered additional help and followed up proactively. |
| 3 | Meets Expectations | The agent resolved the issue clearly and professionally but didn’t go beyond the basics. |
| 1 | Needs Improvement | The issue was not fully resolved or the agent’s response was unclear, inaccurate, or unhelpful. |

Use examples like these for every metric on your scorecard, so scoring is consistent, and agents know what great looks like.
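One way to stop reviewers from inventing in-between scores is to store the rubric as data and reject any score it doesn’t define. Here is a minimal Python sketch. It assumes the three-level resolution rubric from the table above, and the rubric name and `describe` helper are hypothetical.

```python
# Hypothetical rubric for one metric: only scores with a written anchor are allowed.
RESOLUTION_RUBRIC = {
    5: "Exceeds Expectations: resolved the issue and went above and beyond.",
    3: "Meets Expectations: resolved the issue clearly but stuck to the basics.",
    1: "Needs Improvement: issue not fully resolved, or response unclear or inaccurate.",
}

def describe(score: int, rubric: dict[int, str] = RESOLUTION_RUBRIC) -> str:
    """Return the anchor text for a score, rejecting scores the rubric doesn't define."""
    if score not in rubric:
        allowed = ", ".join(str(s) for s in sorted(rubric))
        raise ValueError(f"score {score} has no defined meaning; use one of {allowed}")
    return rubric[score]

print(describe(3))
```

A reviewer who enters a 4 gets an error instead of a silent guess. That forces the team to either write a definition for 4 or stick to the three defined levels.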

💡Tip: During training, walk through real calls or chats and score them together. This builds alignment across reviewers and sets clear standards from day one.


7. Test the scorecard before rolling it out

Don’t roll out your QA scorecard to the whole team right away. Test it with a small group first to make sure it works in real-world situations.

Start with about 10–20% of your agents and reviewers. Run a pilot for 3–4 weeks.
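If you pick the pilot group at random instead of hand-picking your strongest agents, you get a more realistic read on how the scorecard performs. Here is a minimal Python sketch. It assumes a plain list of agent IDs and a 15% share, the midpoint of the 10–20% range above. The `pick_pilot_group` helper and the agent IDs are hypothetical.

```python
import random

def pick_pilot_group(agents: list[str], share: float = 0.15, seed: int = 42) -> list[str]:
    """Randomly choose roughly `share` of agents (at least one) for the pilot."""
    if not agents:
        return []
    if not 0 < share <= 1:
        raise ValueError("share must be between 0 and 1")
    size = max(1, round(len(agents) * share))
    rng = random.Random(seed)  # fixed seed so re-running draws the same group
    return sorted(rng.sample(agents, size))

agents = [f"agent_{i:02d}" for i in range(40)]
pilot = pick_pilot_group(agents)  # 15% of 40 agents = 6 agents
print(pilot)
```

A fixed seed keeps the selection reproducible, so you can document who was in the pilot. Change the seed if you want a fresh draw.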

During the pilot, focus on:

- Spotting unclear questions or criteria: Are reviewers confused about how to score certain items?

- Checking if weights feel right: Are high-priority actions getting the right emphasis?

- Gathering honest feedback: What do agents think of the feedback they’re getting? Does it help them improve?

Use what you learn to make small fixes before launching at scale. This helps you avoid messy changes later.

💡Tip: Track results week by week. Refine as you go. And document what changes you make and why—it’ll save time during the full launch.

8. Train everyone who’ll use the scorecard

Even the best scorecard won’t work if people don’t know how to use it.

If agents don’t understand how they’re being scored, they’ll feel blindsided. If managers aren’t aligned on scoring, you’ll get inconsistent reviews.

Make training mandatory for both reviewers and agents. Here’s what to include:

- A quick overview of your QA goals: what are you trying to improve and why?

- A clear walkthrough of each scoring category: what to look for, and what each score means

- Real examples of great (and not-so-great) feedback

Take it a step further by running call review exercises, where agents score a few sample conversations themselves. This helps them see the process from the reviewer’s side and understand the logic behind their scores.

💡Tip: Host open Q&A sessions during training. Let agents ask questions, challenge unclear criteria, and walk away with full clarity. That’s how you get buy-in and build trust in the system.


9. Run regular QA review sessions

A scorecard is only useful if you keep learning from it. Set a fixed rhythm of weekly, bi-weekly, or monthly reviews, based on your team size and ticket volume. This consistency is the key to maintaining a successful quality assurance scorecard.

Use these sessions to:

- Track performance trends using dashboards

- Spot recurring issues early

- Celebrate top performers and small wins
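To spot recurring issues early, compare the same categories across review periods. Here is a minimal Python sketch. It assumes scores are grouped by category for each period and uses a 0.5-point drop in the average as the alert threshold. Both the threshold and the `flag_declines` helper are hypothetical choices.

```python
from statistics import mean

def flag_declines(previous: dict[str, list[int]],
                  current: dict[str, list[int]],
                  threshold: float = 0.5) -> list[str]:
    """Return categories whose average score fell by more than `threshold` points."""
    flagged = []
    for category, scores in current.items():
        before = previous.get(category)
        if not before or not scores:
            continue  # no baseline (or no new reviews) to compare against
        drop = mean(before) - mean(scores)
        if drop > threshold:
            flagged.append(category)
    return flagged

last_month = {"Empathy": [4, 5, 4], "Policy Adherence": [4, 4, 5]}
this_month = {"Empathy": [4, 4, 5], "Policy Adherence": [3, 3, 4]}
print(flag_declines(last_month, this_month))  # ['Policy Adherence']
```

Empathy holds steady, but Policy Adherence drops a full point, so it goes on the agenda for the next review session.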

The goal isn’t just to score; it’s to coach. Use these sessions to give targeted feedback and help agents understand what to work on. This builds trust and reinforces that QA isn’t just about pointing out mistakes; it’s about helping everyone get better.

💡Tip: Treat QA reviews as collaborative sessions. Let agents bring questions, discuss edge cases, and share wins.

10. Act on what you learn

Customer expectations change, and so should your scorecard. Use the insights from your reviews to adjust the scorecard to meet changing needs.

Review and update your scorecard every 3 to 6 months. Take a hard look at what’s working—and what’s not.

- Are certain categories always scoring high or low?

- Are agents confused about what counts as a “5”?

- Are you still measuring what matters most to your business and your customers?
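You can answer the first question above, whether certain categories always score high or low, directly from exported scores. Here is a minimal Python sketch. It assumes 1–5 scores grouped by category and uses hypothetical cutoffs: 90% of reviews at the maximum, or 90% at 2 or below.

```python
def category_health(scores: dict[str, list[int]], max_score: int = 5,
                    low_cutoff: int = 2, share: float = 0.9) -> dict[str, str]:
    """Label categories that are almost always maxed out or almost always low."""
    report = {}
    for category, values in scores.items():
        if not values:
            continue
        maxed = sum(v == max_score for v in values) / len(values)
        low = sum(v <= low_cutoff for v in values) / len(values)
        if maxed >= share:
            report[category] = "always high: consider removing it or raising the bar"
        elif low >= share:
            report[category] = "always low: check the criterion or add training"
    return report

quarter = {
    "Greeting": [5] * 19 + [4],
    "Troubleshooting": [2, 1, 2, 2, 3, 2, 1, 2, 2, 2],
    "Resolution": [3, 4, 5, 3, 4],
}
print(category_health(quarter))
```

Here Greeting is almost always maxed out and Troubleshooting is almost always low. Resolution shows a healthy spread and is left alone.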

Don’t just collect data; act on it. Use your review sessions to tweak scoring criteria, rebalance weights, or refine what “great” looks like based on real conversations.

Types of QA Scorecards + Examples & Templates

Not every team works the same way, and your QA scorecard shouldn’t either. The right scorecard depends on what you’re trying to improve. Are you focused on one-on-one interactions? Process compliance? Team performance over time?

In this section, we’ll break down the most common types of QA scorecards. Let’s start with the most widely used one: Interaction-Based Scorecards.

1. Interaction-based scorecards

Customers remember the conversation, not the channel. Whether it’s a call, chat, or email, what really matters is how the agent handles that moment.

This type of scorecard is built to evaluate the quality of one-on-one conversations. It scores how clearly the agent communicates, how empathetic they are, and whether they resolved the issue fully. If you’re using this format, your criteria should cover tone, clarity, listening, and resolution. These moments are the ones that directly shape the customer’s experience.

Interaction-based scorecards are best for teams looking to improve real-time service quality and drive consistency across agents. You assess one interaction at a time, from greeting to closing, and evaluate how well the agent handled it end-to-end.

MeUndies, a DTC apparel brand, uses this type of scorecard to maintain consistent service during peak seasons, especially when hiring temporary agents. They evaluate interactions for tone, brand alignment, and issue resolution to ensure customers always get the same quality of support.

✅ Best For: Call centers, live chat teams, email support, retail customer service.

✅ Key Focus Areas: Communication, problem resolution, professionalism.

| Metric | Criteria | Score (1-5) |
|---|---|---|
| Greeting | Was the introduction friendly & professional? | |
| Tone & Empathy | Did the agent acknowledge the customer’s concern? | |
| Active Listening | Did the agent clarify & confirm understanding? | |
| Resolution Quality | Was the issue fully resolved within the call/chat? | |
| Closing | Did the agent thank the customer & summarize the next steps? | |

💡 Pro Tip: Add a customer sentiment analysis field (Happy, Neutral, Unhappy) to gauge real-time responses.
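If reviews are stored in a tool or spreadsheet export, each one can be a small record: five 1–5 criteria plus the sentiment field from the tip above. Here is a minimal Python sketch. The `InteractionReview` class is hypothetical, and the percentage is a simple unweighted total.

```python
from dataclasses import dataclass
from enum import Enum

class Sentiment(Enum):
    HAPPY = "Happy"
    NEUTRAL = "Neutral"
    UNHAPPY = "Unhappy"

CRITERIA = ("Greeting", "Tone & Empathy", "Active Listening", "Resolution Quality", "Closing")

@dataclass
class InteractionReview:
    scores: dict[str, int]   # one 1-5 score per criterion in CRITERIA
    sentiment: Sentiment     # reviewer's read of the customer's reaction

    def __post_init__(self) -> None:
        if set(self.scores) != set(CRITERIA):
            raise ValueError("score every criterion exactly once")
        if any(not 1 <= s <= 5 for s in self.scores.values()):
            raise ValueError("scores must be between 1 and 5")

    def percent(self) -> float:
        """Total points as a share of the maximum (5 points per criterion)."""
        return round(100 * sum(self.scores.values()) / (5 * len(CRITERIA)), 1)

review = InteractionReview(
    scores={"Greeting": 5, "Tone & Empathy": 4, "Active Listening": 4,
            "Resolution Quality": 3, "Closing": 4},
    sentiment=Sentiment.NEUTRAL,
)
print(review.percent(), review.sentiment.value)  # 80.0 Neutral
```

Keeping sentiment as a separate field, rather than folding it into the score, lets you check later whether high-scoring interactions actually leave customers happy.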

2. Process-adherence scorecards

In industries like healthcare or finance, following the right steps is essential. Even if the tone is friendly or the response is fast, skipping a process or missing a compliance step can be risky.

Process-adherence scorecards help you evaluate whether agents are sticking to required protocols. They’re designed to prioritize accuracy, consistency, and regulatory compliance over soft skills like empathy.

If you’re using this type of scorecard, your criteria should focus on whether specific actions were completed, such as identity verification, proper documentation, compliance warnings, and secure data handling. Think of it as a checklist to ensure no critical step is overlooked.

For example, a healthcare provider might use a process-adherence scorecard to ensure agents verify patient identity, record case notes correctly, and follow HIPAA protocols during every interaction. Any deviation could lead to compliance risks, so each step is scored as either completed or missed.

✅ Best For: Healthcare, finance, insurance, and legal services.

✅ Key Focus Areas: Compliance, documentation accuracy, SOP adherence, and legal disclaimers.

The QA scorecard template you can use for process adherence:

| SOP/Process Step | What to Check | Compliant (Yes/No) | Notes |
|---|---|---|---|
| Patient Verification | Was the customer’s identity verified correctly? | | |
| Documentation Complete | Did the agent update records accurately? | | |
| Compliance Warning Given | Did the agent provide the necessary compliance disclaimer? | | |
| Data Protection | Were confidential details handled appropriately? | | |

This scorecard works best as a checklist. Each item is either compliant or not. This makes the evaluation simple and compliance easier to track across large teams.

💡 Pro Tip: Use a checklist-style scorecard for binary compliance evaluations (Yes/No or Pass/Fail).
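Binary checklists are simple to roll up across a team. Here is a minimal Python sketch. It assumes the four steps from the template above and a pass/fail rule where one missed step fails the whole interaction. A step with no recorded answer also counts as missed. The helper names are hypothetical.

```python
REQUIRED_STEPS = (
    "Patient Verification",
    "Documentation Complete",
    "Compliance Warning Given",
    "Data Protection",
)

def passes(checklist: dict[str, bool]) -> bool:
    """An interaction passes only if every required step was completed."""
    # Steps with no recorded answer default to False, i.e. treated as missed.
    return all(checklist.get(step, False) for step in REQUIRED_STEPS)

def compliance_rate(reviews: list[dict[str, bool]]) -> float:
    """Percentage of reviewed interactions that passed every step."""
    if not reviews:
        return 0.0
    return 100 * sum(passes(r) for r in reviews) / len(reviews)

all_done = dict.fromkeys(REQUIRED_STEPS, True)
missed_warning = {**all_done, "Compliance Warning Given": False}
print(compliance_rate([all_done, all_done, missed_warning, all_done]))  # 75.0
```

Treating a blank answer as a miss is deliberately strict. In regulated settings, an unrecorded step is usually indistinguishable from a skipped one.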

3. Outcome-focused scorecards

Sometimes, what matters most isn’t how the interaction went; it’s whether the customer got what they needed. Did the issue get resolved? Did the customer leave satisfied? Was a follow-up required?

Outcome-focused scorecards help you measure the results of support interactions. They shift attention away from how the agent handled the process and toward what the support achieved.

Your criteria should be tied to performance metrics like First Call Resolution (FCR), CSAT, escalation rates, and repeat contact frequency. These numbers reveal whether your support is working.

For example, Replo, a SaaS company that builds landing pages for Shopify, uses an outcome-focused QA scorecard through Zendesk. Their team tracks metrics like First Call Resolution (FCR), CSAT, and escalation rate to identify top performers and spot areas for coaching.

✅ Best For: Tech support, SaaS, online retail, success teams, and e-commerce industries.

✅ Key Focus Areas: Resolution success (FCR, ticket closure rates), customer satisfaction (CSAT, NPS), and escalation and repeat contact rates.

Here is an outcome-focused QA scorecard template:

| Metric | Target Value | Actual Value |
|---|---|---|
| First-Call Resolution (FCR) | ≥80% | |
| Customer Satisfaction (CSAT) | ≥90% | |
| Escalation Rate | ≤5% | |
| Repeat Contact Rate | ≤10% | |

Each metric is tied to a threshold so agents and reviewers know exactly what “good” looks like.
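The template mixes “at least” targets (≥) with “at most” targets (≤), so any automated check has to know which direction counts as good. Here is a minimal Python sketch. It uses the thresholds from the template above, and the actual values in the example are made-up illustration, not real results.

```python
# Targets from the template above: "min" means at least, "max" means at most.
TARGETS = {
    "First-Call Resolution (FCR)": ("min", 80.0),
    "Customer Satisfaction (CSAT)": ("min", 90.0),
    "Escalation Rate": ("max", 5.0),
    "Repeat Contact Rate": ("max", 10.0),
}

def check_targets(actuals: dict[str, float]) -> dict[str, bool]:
    """Return True/False per metric depending on whether its target was met."""
    results = {}
    for metric, (direction, target) in TARGETS.items():
        if metric not in actuals:
            continue  # metric not reported this period
        value = actuals[metric]
        results[metric] = value >= target if direction == "min" else value <= target
    return results

# Hypothetical month of results for one agent or team:
results = check_targets({"First-Call Resolution (FCR)": 82.0,
                         "Customer Satisfaction (CSAT)": 88.5,
                         "Escalation Rate": 4.0,
                         "Repeat Contact Rate": 12.0})
print(results)
```

In this example, FCR and escalation rate hit their targets, while CSAT and repeat contacts miss. Those two misses are where the coaching conversation should start.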

💡 Pro Tip: Make success visible. Give agents clear thresholds so they know what great looks like (e.g., 90% CSAT or an escalation rate under 5%).

4. Hybrid scorecards

Some teams need to balance empathy, compliance, and outcomes without sacrificing one for the other. That’s where hybrid scorecards come in.

Hybrid scorecards combine elements from interaction-based, process-adherence, and outcome-focused models. Your criteria can include agent communication, SOP compliance, and performance metrics like resolution rate or conversion. The goal is to evaluate the full customer journey, not just one part.

SeatGeek built a hybrid scorecard using MaestroQA. Initially, they focused on interaction quality: tone, clarity, and empathy in customer service. However, as they grew, they added outcome-based metrics like FCR and efficiency to track how well agents performed at scale.

✅ Best For: Companies with multi-dimensional support goals, sales-driven support teams, and teams balancing experience, efficiency, and compliance.

✅ Key Focus Areas: Agent behavior and communication, process accuracy and SOP compliance, and measurable outcomes like resolution and conversions.

The QA scorecard template you can use for hybrid analysis:

| Metric | Type | Score (1–5 or Yes/No) |
|---|---|---|
| Customer Experience | Interaction-Based | |
| SOP Compliance | Process-Adherence | |
| First-Call Resolution | Outcome-Focused | |
| Sales Conversion Rate | Outcome-Focused | |

Customize this based on your KPIs. Some metrics may use a 1–5 scale, and others may just be Yes/No.
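Mixed scales only combine cleanly after you put them on a common footing. Here is a minimal Python sketch that rescales everything to 0–100 before weighting. It assumes outcome metrics such as FCR and conversion are reported as percentages. The weights and example values are hypothetical.

```python
def normalize(value: float, scale: str) -> float:
    """Convert a raw score to 0-100 so mixed scales can be combined."""
    if scale == "1-5":
        return 100 * (value - 1) / 4        # 1 -> 0, 3 -> 50, 5 -> 100
    if scale == "yes/no":
        return 100.0 if value else 0.0
    if scale == "percent":
        return float(value)                 # already on a 0-100 scale
    raise ValueError(f"unknown scale: {scale}")

def hybrid_score(items: list[tuple[str, float, str, float]]) -> float:
    """items: (metric, raw value, scale, weight); weights should sum to 1."""
    return round(sum(weight * normalize(value, scale)
                     for _, value, scale, weight in items), 1)

card = [
    ("Customer Experience", 4, "1-5", 0.3),
    ("SOP Compliance", True, "yes/no", 0.3),
    ("First-Call Resolution", 78.0, "percent", 0.2),
    ("Sales Conversion Rate", 12.0, "percent", 0.2),
]
print(hybrid_score(card))
```

Without normalization, a raw conversion rate of 12 would be added directly to a 1–5 score and swamp it. Rescaling first keeps each metric’s influence equal to the weight you chose.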

💡 Pro Tip: Hybrid scorecards are great for teams with mixed KPIs, like support teams that also handle upsells or compliance. They give you full visibility without creating separate systems.

5. Team performance scorecards

Sometimes, it’s not about how well one agent performs but how the entire team operates. Team performance scorecards help you measure shared outcomes and track how the group works toward collective goals.

The criteria here focus on team-level metrics like average handling time, CSAT, escalation rates, and internal contributions such as documentation updates or knowledge sharing. It’s about identifying bottlenecks, improving workflows, and holding the team accountable as a unit.

In IT helpdesks, where tickets are routed to whichever agent is available, team scorecards track trends like backlog size, escalation rates, and how often knowledge base articles are updated. This gives managers insight into process issues instead of focusing on individual performance.

✅ Best For: IT or internal support desks, shared inbox support, and support pods or shift-based teams with rotating responsibilities.

✅ Key Focus Areas: Handling speed and workload balance, collective CSAT/NPS scores, process ownership and internal contributions, and escalation and handoff quality.

The QA scorecard template you can use for team performance analysis:

| Metric | Team Target | Actual Performance |
|---|---|---|
| Average Handling Time | ≤5 minutes | |
| Team CSAT Average | ≥85% | |
| Escalation Rate | ≤5% | |
| Knowledge Sharing | % of agents who contribute to internal documentation | |

💡 Pro Tip: Team scorecards encourage agents to work together—not compete. Use them to highlight shared wins, not just individual metrics.
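Team metrics are aggregates over everyone’s tickets rather than per-agent scores. Here is a minimal Python sketch that fills in the “Actual Performance” column of the template above. The `TeamTicket` record is hypothetical. It assumes CSAT is recorded per ticket as a percentage, with `None` meaning the customer didn’t respond, and that you already know which agents contributed to internal docs.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional

@dataclass
class TeamTicket:
    agent: str
    handle_minutes: float
    csat: Optional[float]  # survey result as a percentage; None if no response
    escalated: bool

def team_summary(tickets: list[TeamTicket], contributors: set[str]) -> dict[str, float]:
    """Team-level metrics; `contributors` = agents who updated internal docs."""
    if not tickets:
        raise ValueError("need at least one ticket")
    agents = {t.agent for t in tickets}
    rated = [t.csat for t in tickets if t.csat is not None]  # skip non-responses
    return {
        "avg_handle_minutes": round(mean(t.handle_minutes for t in tickets), 1),
        "team_csat": round(mean(rated), 1) if rated else 0.0,
        "escalation_rate": round(100 * sum(t.escalated for t in tickets) / len(tickets), 1),
        "knowledge_sharing": round(100 * len(contributors & agents) / len(agents), 1),
    }

tickets = [
    TeamTicket("ana", 4.0, 100.0, False),
    TeamTicket("ben", 6.0, 80.0, False),
    TeamTicket("ana", 3.0, None, True),
    TeamTicket("cam", 5.0, 80.0, False),
]
print(team_summary(tickets, contributors={"ana", "cam"}))
```

CSAT is averaged only over tickets with a survey response, while escalation rate is computed over all tickets. Mixing those denominators would skew the team picture.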

6. Role-specific scorecards

Not every support role looks the same, so your QA scorecard shouldn’t treat them as if they do. A billing specialist, a technical support agent, and a frontline rep each have different responsibilities and success metrics. Role-specific scorecards are built to reflect those differences.

Your criteria should match what success looks like for the role. For billing reps, that might be policy knowledge and accuracy. For technical agents, it could be troubleshooting skills. For general support, it’s tone and issue resolution. A one-size-fits-all scorecard can miss what matters in each function.

Let’s say your team includes tech specialists, frontline support agents, and billing reps. Each role requires a different focus: troubleshooting accuracy for techs, clarity and tone for support agents, and policy understanding for billing.

A role-specific scorecard lets you focus on their key skills, knowledge areas, and outcomes that define success for each role.

✅ Best For: Multi-role support teams and specialized roles (tech support, billing, customer service).

✅ Key Focus Areas: Job-specific KPIs (e.g., resolution accuracy, policy adherence, upsell success), communication or technical skills based on role type, and role-aligned compliance or soft skills.

The QA scorecard template you can use for role-specific analysis:

| Role | Metric | Score (1-5) |
|---|---|---|
| Technical Specialist | Accuracy of Troubleshooting | |
| Support Agent | Communication Skills | |
| Billing Specialist | Policy Knowledge | |

💡 Pro Tip: Weigh metrics based on role priorities. A tech specialist might be scored more heavily on accuracy, while a support agent might be scored more on tone and resolution time.
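Role-based weighting can be as simple as keeping one weight profile per role. Here is a minimal Python sketch. The category names and weights are hypothetical, chosen to mirror the tip above, and scores are assumed to be on a 1–5 scale.

```python
# Hypothetical weight profiles; each role's weights sum to 1.0.
ROLE_WEIGHTS = {
    "Technical Specialist": {"accuracy": 0.6, "communication": 0.2, "resolution": 0.2},
    "Support Agent": {"accuracy": 0.2, "communication": 0.4, "resolution": 0.4},
    "Billing Specialist": {"accuracy": 0.3, "policy_knowledge": 0.5, "communication": 0.2},
}

def role_score(role: str, scores: dict[str, int], max_score: int = 5) -> float:
    """Weighted 0-100 score using the weight profile for the agent's role."""
    weights = ROLE_WEIGHTS[role]
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for {sorted(missing)}")
    return round(100 * sum(w * scores[c] / max_score for c, w in weights.items()), 1)

same_scores = {"accuracy": 3, "communication": 5, "resolution": 5}
print(role_score("Technical Specialist", same_scores))  # 76.0
print(role_score("Support Agent", same_scores))         # 92.0
```

The same middling accuracy score costs a technical specialist far more than a support agent. That is exactly the emphasis role-specific weighting is meant to create.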

With so many scorecard options, how do you decide which is right for your team? Here’s a quick guide to help you match the right scorecard to your business priorities.

How to Choose the Right QA Scorecard?

Your QA scorecard should reflect your biggest priorities—speed, empathy, compliance, or consistency. Start by asking: What’s the one thing you want your support team to get better at? That answer should guide the type of scorecard you use.

Here’s how to align your goals with the scorecard that best supports them.

- If your goal is to improve how agents talk to customers (tone, empathy, clarity) → Go with an Interaction-Based Scorecard.

- If your team must follow strict processes or regulations → Use a Process-Adherence Scorecard.

- If you care most about outcomes like resolution rates, CSAT, or First Contact Resolution (FCR) → Choose an Outcome-Focused Scorecard.

- If you want to measure both soft skills and hard results → Build a Hybrid Scorecard.

- If collaboration is a priority and you work from a shared inbox or pod system → Use a Team Performance Scorecard.

- If your team has different roles like billing, tech support, or onboarding → Use Role-Specific Scorecards.

The easiest way to choose a scorecard is to start with your biggest challenge. Are customers unhappy? Are issues slipping through the cracks? Or are compliance errors becoming a risk? Once you know the problem, decide what kind of feedback will actually help fix it.

That might mean coaching agent behavior, making sure processes are followed, or tracking measurable results. From there, pick the scorecard type that aligns with that goal. Don’t wait for a perfect setup. You can start with what fits your current needs, put it into practice, and adjust as you go.

Even the right scorecard can fall short if it’s applied poorly. Here are the most common mistakes to watch for.

Common QA Scorecard Mistakes (and How to Avoid Them)

A QA scorecard only works if it’s applied with clarity and purpose. But too often, teams fall into patterns that make it ineffective, like using vague criteria, skipping calibration, giving unhelpful feedback, focusing only on scores, ignoring customer input, treating QA as a standalone task instead of a coaching tool, letting the scorecard go stale, and changing it without asking agents.

To make your QA process truly performance-driven, here’s how to fix each of these eight mistakes:

1. Replace vague or subjective criteria with observable behaviors. Rewrite any category that uses subjective terms like “friendly tone” or “positive attitude.” Instead, specify: “Used the customer’s name,” “Paused to confirm understanding,” or “Avoided interrupting.”

2. Run monthly calibration sessions using real conversations. Select 2–3 live interactions, have all reviewers score them independently, then compare results. Align on what each score means and adjust rubrics if needed.

3. Write feedback that includes a direct recommendation. In every piece of feedback, include one sentence that starts with “To improve…” For example: “To improve clarity, reduce jargon when explaining account details.”

4. Attach a written comment to every score below the maximum. If an agent doesn’t get full marks, explain exactly why. For instance: “Scored 3/5 on resolution because the agent didn’t summarize the next steps before ending the call.”

5. Incorporate customer feedback into your QA review process. Add CSAT or NPS results to the evaluation form. Reviewers should reference customer comments when scoring areas like empathy or resolution satisfaction.

6. Turn every scorecard into a documented coaching session. After scoring, schedule a 10-minute 1:1. Use a simple template: one strength, one area to improve, and a next-step action (e.g., shadowing another agent, script revision).

7. Block time every quarter to review and revise the scorecard. Review scoring trends and agent feedback. Remove any question that’s always maxed out or no longer reflects current processes. Add new criteria if your product or support approach has changed.

8. Collect structured agent input before rolling out changes. Send out a short internal survey or host a 30-minute feedback session. Ask agents what criteria they feel are fair, what’s unclear, and where the scorecard doesn’t reflect their day-to-day work.

If your QA scorecard doesn’t evolve, guide coaching, and drive clear next steps, it isn’t doing its job. These fixes help make sure your evaluations lead to measurable improvements.
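If your reviewers log scores in a spreadsheet or export, the comparison step of a calibration session (mistake 2) is easy to automate. Here’s a minimal Python sketch, assuming a 1–5 scale and a hypothetical tolerance of 1 point: any criterion where reviewers’ scores for the same conversation differ by more than that gets flagged for discussion. The reviewer names, criteria, and threshold are illustrative, not taken from any particular QA tool.

```python
from typing import Dict, List

# Scores for ONE conversation: reviewer -> {criterion -> score on a 1-5 scale}.
Scores = Dict[str, Dict[str, int]]


def calibration_gaps(scores: Scores, tolerance: int = 1) -> Dict[str, int]:
    """Return criteria where reviewers disagree by more than `tolerance` points.

    Each flagged criterion maps to its spread (highest minus lowest score)
    across reviewers. The default tolerance is a hypothetical choice.
    """
    criteria = set()
    for reviewer_scores in scores.values():
        criteria.update(reviewer_scores)

    gaps: Dict[str, int] = {}
    for criterion in sorted(criteria):
        # Only compare reviewers who actually scored this criterion.
        values: List[int] = [s[criterion] for s in scores.values() if criterion in s]
        spread = max(values) - min(values)
        if spread > tolerance:
            gaps[criterion] = spread
    return gaps


if __name__ == "__main__":
    session = {
        "reviewer_a": {"greeting": 5, "clarity": 4, "resolution": 3},
        "reviewer_b": {"greeting": 5, "clarity": 2, "resolution": 3},
        "reviewer_c": {"greeting": 4, "clarity": 4, "resolution": 5},
    }
    print(calibration_gaps(session))  # {'clarity': 2, 'resolution': 2}
```

Flagged criteria are the ones to discuss first. If the same criterion keeps showing up session after session, its rubric description probably needs rewriting.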

Key Best Practices for QA Scorecards

Once your QA scorecard is live, the real work begins. A well-designed scorecard is only useful if it’s consistently applied, regularly reviewed, and actively used to coach and improve.

To make sure it stays useful and aligned with your goals, focus on eight key practices: align with business goals, involve agents, coach (don’t micromanage), calibrate consistently, streamline scoring, review criteria regularly, reduce reviewer friction, and use insights to improve processes.

Here’s how to put each into action:

- Align with business goals: Make sure your QA scorecard supports the outcomes that matter, whether it’s lowering churn, improving CSAT, or increasing resolution speed. This ties day-to-day performance directly to strategic priorities.

- Involve agents in shaping the scorecard: Agents are more likely to engage with a process they helped create. Collect feedback before rollout, test new criteria with a small group, and regularly ask what’s working (or not).

- Coach with the data, don’t micromanage: Avoid using the scorecard to tally wins and losses. Use trends to spark coaching conversations, highlight strengths, and guide improvement.

- Calibrate consistently to stay objective: Host regular sessions where QA reviewers score the same calls and align on interpretation. This sharpens consistency and keeps the evaluation process fair, especially across large teams.

- Streamline the scoring system: Cut down bloated forms and repetitive criteria. Group related actions, use dropdowns or severity tags, and focus only on what drives quality outcomes.

- Review and refine the scorecard every quarter: Adapt the process as your business goals shift. Revisit your QA scorecard at least once a quarter to make sure it’s still relevant, accurate, and focused on current priorities.

- Make the review process easy for evaluators: Build automations, reduce manual effort, and ensure reviewers can complete scorecards quickly without sacrificing accuracy.

- Use QA trends to improve systems, not just people: If multiple agents struggle with the same issue, it’s likely a workflow or documentation problem. Use aggregated data to drive cross-functional improvements.

When you treat your scorecard as a living part of your team’s workflow, it becomes a driver of consistent performance.
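Two of the practices above, refining the criteria and using trends to improve systems, start from the same aggregate view of scores. Here’s a minimal Python sketch, assuming you already have each agent’s average per criterion on a 1–5 scale for the review period. It flags criteria where a large share of agents score low (a likely workflow or documentation issue) and criteria where every agent is at the maximum (candidates for removal). The 3.0 cutoff and 50% share are hypothetical defaults you’d tune for your team.

```python
from typing import Dict, List, Tuple

# agent -> {criterion -> average score on a 1-5 scale over the review period}
AgentAverages = Dict[str, Dict[str, float]]

MAX_SCORE = 5.0


def criterion_report(
    averages: AgentAverages,
    low_score: float = 3.0,
    systemic_share: float = 0.5,
) -> Tuple[List[str], List[str]]:
    """Return (systemic_issues, always_maxed) lists of criterion names.

    systemic_issues: criteria where at least `systemic_share` of agents
    average below `low_score`, suggesting a process or documentation gap.
    always_maxed: criteria where every agent averages the maximum score,
    so the question may no longer tell performance levels apart.
    """
    by_criterion: Dict[str, List[float]] = {}
    for agent_scores in averages.values():
        for criterion, value in agent_scores.items():
            by_criterion.setdefault(criterion, []).append(value)

    systemic_issues: List[str] = []
    always_maxed: List[str] = []
    for criterion in sorted(by_criterion):
        values = by_criterion[criterion]
        # Share of agents whose average falls below the low-score cutoff.
        share_low = sum(v < low_score for v in values) / len(values)
        if share_low >= systemic_share:
            systemic_issues.append(criterion)
        if all(v >= MAX_SCORE for v in values):
            always_maxed.append(criterion)
    return systemic_issues, always_maxed


if __name__ == "__main__":
    team = {
        "agent_1": {"greeting": 5.0, "refund_policy": 2.5, "clarity": 4.0},
        "agent_2": {"greeting": 5.0, "refund_policy": 2.0, "clarity": 3.5},
        "agent_3": {"greeting": 5.0, "refund_policy": 4.0, "clarity": 2.5},
    }
    print(criterion_report(team))  # (['refund_policy'], ['greeting'])
```

A criterion on the first list points to a process fix rather than individual coaching; one on the second list is worth a closer look at your next quarterly revision.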

Making QA Scorecards Work for You

A strong QA scorecard isn’t just a checklist; it helps you track and improve performance.

Whether you’re coaching agents, ensuring compliance, or improving customer experience, your scorecard keeps the team focused on what matters: clarity, consistency, and growth.

- Start simple.

- Pick one scorecard that solves your most urgent problem.

- Put it into practice.

- Review the results.

- Then improve it.

Your scorecard will only get better with use, and so will your team.

Frequently Asked Questions

1. What is a QA scorecard?

A QA scorecard is a tool that helps you review customer conversations in a structured way. It shows whether agents are following the right process, communicating clearly, and resolving issues effectively. You use it to spot what’s working, what’s not, and where your team can improve.

2. How do I create a QA scorecard?

Start with the basics: What do you want to improve? Maybe it’s faster responses, fewer escalations, or a better tone. Once you’re clear on your goals, choose 4–6 things you want to score, like empathy, accuracy, or resolution quality. Assign a weight to each one based on how important it is. Then pick a simple scoring system (1–5), and test the scorecard with real tickets before rolling it out to the team.
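To see how weights and a 1–5 scale combine into one score, here’s a minimal Python sketch. The four criteria and their weights are illustrative assumptions; weights are percentages that sum to 100, and each rating is converted to a fraction of the top rating before weighting.

```python
from typing import Dict

MAX_RATING = 5  # top of the 1-5 scale


def weighted_qa_score(ratings: Dict[str, int], weights: Dict[str, float]) -> float:
    """Combine 1-5 ratings into a 0-100 score using per-criterion weights.

    `weights` are percentages that must sum to 100 and cover exactly the
    rated criteria. Each rating is scaled to a fraction of MAX_RATING first.
    """
    if abs(sum(weights.values()) - 100) > 1e-9:
        raise ValueError("weights must sum to 100")
    if set(ratings) != set(weights):
        raise ValueError("ratings and weights must cover the same criteria")
    for criterion, rating in ratings.items():
        if not 1 <= rating <= MAX_RATING:
            raise ValueError(f"{criterion}: rating {rating} is outside 1-{MAX_RATING}")

    return sum(weights[c] * ratings[c] / MAX_RATING for c in ratings)


if __name__ == "__main__":
    weights = {"resolution": 40, "accuracy": 30, "empathy": 20, "process": 10}
    ratings = {"resolution": 4, "accuracy": 5, "empathy": 3, "process": 5}
    print(round(weighted_qa_score(ratings, weights), 1))  # 84.0
```

Scaling each rating against the maximum means a perfect review scores 100, which makes results easy to compare with a target range. Note that on this scale the lowest possible score is 20, not 0; if you’d rather have a 0 floor, map each rating as (rating − 1) / 4 instead.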

3. Who should create and maintain the QA scorecard?

It should be a team effort. Support managers bring the big-picture goals. QA analysts know how to score fairly. Agents can flag what’s realistic in day-to-day work. Once it’s up and running, one person, usually a QA lead or manager, should own the scorecard, keep it updated, and ensure it reflects what the team aims for.

4. How do I choose metrics for my QA scorecard?

Focus on the actions that drive great customer experiences. Think: Did the agent resolve the issue? Did they show empathy? Did they follow the process? Don’t add items just because they’re easy to track — only score what matters to customers or your business.

5. How often should QA evaluations be done?

Pick a rhythm that works for your team and stick to it. Weekly or biweekly reviews work well for most. What’s more important than frequency is consistency. Regular QA reviews help you catch issues early, coach with real examples, and build lasting habits.

6. What is a good QA score?

There’s no magic number, but most teams aim for something in the 85% to 95% range. What matters is improvement. Are scores going up? Are common mistakes going down? Use the score as a guide, not a judgment.
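Both questions above can be answered with a simple period-over-period comparison. Here’s a minimal Python sketch, assuming each review record holds an overall 0–100 score and a list of mistake tags (the `Review` type and the tag names are hypothetical). Mistakes are compared as a rate per review, so a month with more reviews doesn’t look worse just because more conversations were checked.

```python
from collections import Counter
from statistics import mean
from typing import Dict, List, NamedTuple


# One completed scorecard (hypothetical record shape).
class Review(NamedTuple):
    score: float         # overall QA score, 0-100
    mistakes: List[str]  # hypothetical mistake tags, e.g. "no_next_steps"


def compare_periods(previous: List[Review], current: List[Review]) -> dict:
    """Compare two periods: change in average score and in mistake rates.

    Mistake rates are occurrences per review. A negative rate change means
    the mistake became less common.
    """
    if not previous or not current:
        raise ValueError("both periods need at least one review")

    prev_counts = Counter(tag for r in previous for tag in r.mistakes)
    curr_counts = Counter(tag for r in current for tag in r.mistakes)
    all_tags = sorted(set(prev_counts) | set(curr_counts))

    score_change = mean(float(r.score) for r in current) - mean(float(r.score) for r in previous)
    # Counter returns 0 for tags that never appeared in a period.
    rate_change: Dict[str, float] = {
        tag: round(curr_counts[tag] / len(current) - prev_counts[tag] / len(previous), 2)
        for tag in all_tags
    }
    return {"score_change": round(score_change, 1), "mistake_rate_change": rate_change}


if __name__ == "__main__":
    last_month = [Review(78.0, ["no_next_steps", "jargon"]), Review(82.0, ["no_next_steps"])]
    this_month = [Review(88.0, ["jargon"]), Review(90.0, [])]
    print(compare_periods(last_month, this_month))
    # {'score_change': 9.0, 'mistake_rate_change': {'jargon': 0.0, 'no_next_steps': -1.0}}
```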

7. What tools can help build QA scorecards?

You can start with something as simple as Google Sheets. But if you want to scale, tools like Hiver, MaestroQA, Klaus, or Playvox make tracking scores, leaving comments, and spotting patterns over time easier.

8. Is there a QA scorecard template I can use?

Yes, most QA platforms offer templates you can tweak. If you’re building your own, try a simple framework like the 4Cs: Clarity, Connection, Compliance, and Completion. It helps you cover all the bases without overcomplicating things.

9. What’s the difference between a QA scorecard and a CSAT survey?

A QA scorecard is what you use to evaluate the quality of support. A CSAT survey is what your customers use to tell you how they feel. Both are useful, but they measure different things. You need both to see the whole picture: internal performance and external perception.
